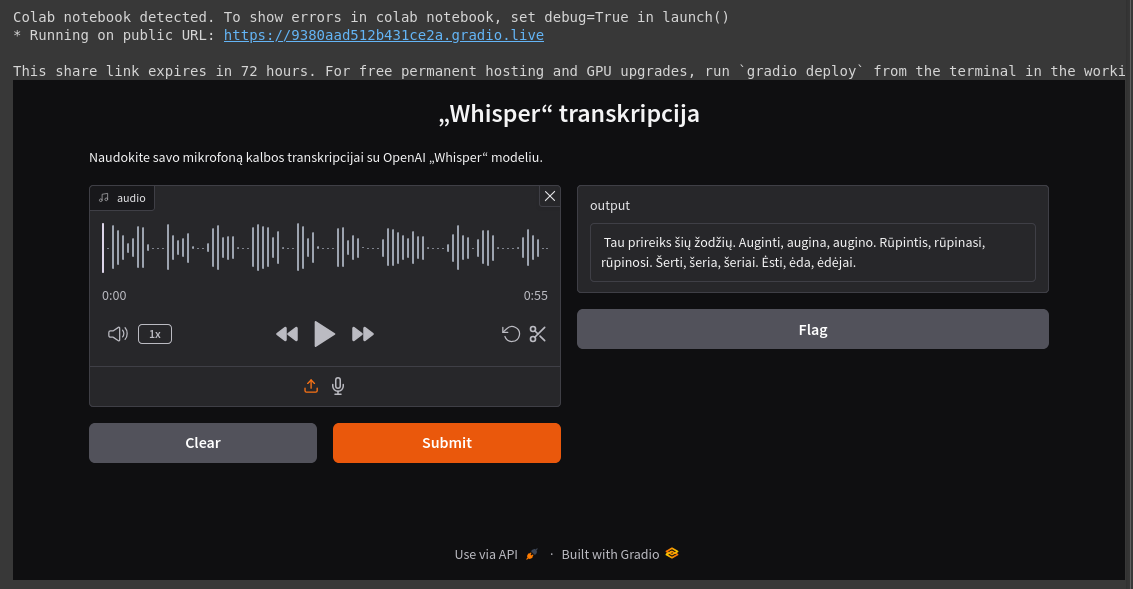

Using the "Whisper" Model with a "Gradio" Interface for Lithuanian Speech Recognition

In this article, we discuss how to use the "OpenAI Whisper" model with the "Gradio" library to transcribe Lithuanian speech. The code runs in the "Google Colab" environment using Python, so it can be tested easily without any complex configuration on your own computer.

"Whisper" is an OpenAI speech recognition model that can transcribe speech in many languages, including Lithuanian, and translate it into English. Its accuracy is generally high, although both accuracy and speed depend on the language, the model size, and the hardware. This makes it a good choice for automatically transcribing long audio or video recordings.

"Gradio" is a Python library for quickly building web interfaces for AI models. With "Gradio," we can create an intuitive interface that lets others try out the "Whisper" model in their browser. The library is particularly useful for demonstrating model capabilities, building prototypes, or integrating models into existing projects.

Preparation

First, we need to install the "Whisper" and "Gradio" libraries with pip. In Colab, the leading "!" runs the line as a shell command:

```
!pip install git+https://github.com/openai/whisper.git
!pip install gradio
```
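Before moving on, it can help to confirm that everything installed correctly. The sketch below uses only the standard library: `importlib.util.find_spec` checks whether the `whisper` and `gradio` modules can be imported, and `shutil.which` looks for `ffmpeg`, the command-line tool Whisper relies on to decode audio (it is usually preinstalled in Colab). The function name `check_environment` is our own, chosen for illustration.

```python
import importlib.util
import shutil


def check_environment() -> dict:
    """Report whether the pieces this tutorial relies on are available.

    Checks the `whisper` and `gradio` Python modules and the `ffmpeg`
    command-line tool, which Whisper uses to decode audio files.
    """
    return {
        "whisper": importlib.util.find_spec("whisper") is not None,
        "gradio": importlib.util.find_spec("gradio") is not None,
        "ffmpeg": shutil.which("ffmpeg") is not None,
    }


# Print a simple OK/missing line for each requirement.
for name, ok in check_environment().items():
    print(f"{name}: {'OK' if ok else 'missing'}")
```

Note that this only checks that a module named `whisper` is importable; an unrelated package with the same module name would also pass, which is one reason to install directly from the GitHub repository as shown above.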

Next, we import these libraries into our Python environment:

```python
import whisper
import gradio as gr
```

Loading the Model

The "Whisper" library offers several pre-trained models that differ in size, speed, and accuracy: "tiny," "base," "small," "medium," and "large." In this example, we use the "large" model. Larger models are generally more accurate, but they are also slower and need more memory, so in Colab it is best to switch to a GPU runtime before loading it.

```python
model = whisper.load_model("large")
```
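If you are not sure which model your runtime can handle, a small helper can pick one based on the available GPU memory. This is an assumption-based heuristic, not part of Whisper: the VRAM figures are the approximate values listed in the openai/whisper README, and `pick_model_name` is a hypothetical helper.

```python
# Approximate VRAM requirements (GB) as listed in the openai/whisper README.
# These are rough guides, not guarantees; actual usage varies.
APPROX_VRAM_GB = {
    "tiny": 1,
    "base": 1,
    "small": 2,
    "medium": 5,
    "large": 10,
}


def pick_model_name(available_vram_gb: float) -> str:
    """Return the largest model whose approximate VRAM need fits the budget.

    Falls back to "tiny" when even the smallest model does not fit,
    since it is the cheapest option to try (for example, on CPU).
    """
    fitting = [name for name, need in APPROX_VRAM_GB.items() if need <= available_vram_gb]
    # Dicts keep insertion order (smallest to largest), so the last fit is the largest.
    return fitting[-1] if fitting else "tiny"
```

In Colab, `torch.cuda.get_device_properties(0).total_memory` reports the GPU memory in bytes; dividing it by `1024**3` gives a value that can be passed to `pick_model_name` before calling `whisper.load_model`.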

Transcription Function

Let's create a function that will use the "Whisper" model to transcribe the given audio recording:

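```python
def transcribe(audio):
    # `audio` is the path to the recorded or uploaded file.
    result = model.transcribe(audio)
    # The result is a dict; "text" holds the full transcription.
    return result["text"]
```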
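Because this article targets Lithuanian, it may be worth telling Whisper the language up front instead of relying on automatic detection, which can occasionally pick the wrong language, particularly on short clips. `model.transcribe` accepts decoding options such as `language` and `task`; the sketch below passes `language="lt"` and also guards against the `None` value Gradio sends when the user submits without any audio. The name `transcribe_lithuanian` and its message text are hypothetical, and the model is passed in as an argument so the function can be tried with a stand-in object.

```python
from typing import Optional, Protocol


class SupportsTranscribe(Protocol):
    """Anything with a Whisper-style transcribe(audio, **options) -> dict method."""

    def transcribe(self, audio: str, **options) -> dict: ...


def transcribe_lithuanian(
    audio: Optional[str], model: SupportsTranscribe, task: str = "transcribe"
) -> str:
    """Transcribe an audio file, telling Whisper the speech is Lithuanian.

    `audio` is the file path Gradio passes with type="filepath"; it is None
    when the user submits without recording or uploading anything.
    `task="translate"` asks Whisper for an English translation instead.
    """
    if audio is None:
        return "No audio received. Please record or upload a file."
    # language="lt" skips automatic language detection.
    result = model.transcribe(audio, language="lt", task=task)
    # Whisper's text often starts with a space, so trim it.
    return result["text"].strip()
```

To use it in the interface, pass `fn=lambda audio: transcribe_lithuanian(audio, model)` to `gr.Interface`.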

Gradio Interface

Using the "Gradio" library, let’s create a simple user interface that allows audio recording using a microphone or uploading an audio file to obtain its transcription:

```python
iface = gr.Interface(
    fn=transcribe,                     # called with the audio file path
    inputs=gr.Audio(type="filepath"),  # microphone recording or file upload
    outputs="text",
    title="Whisper Transcription",
    description="Record with your microphone or upload an audio file to transcribe it with the OpenAI Whisper model.",
)

iface.launch()
```

The interface takes audio as input and returns the transcription as text. After the launch() method is called, the "Gradio" interface becomes accessible in the browser at a generated unique URL.
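For longer recordings, a single block of text can be hard to navigate. Besides `"text"`, the dict returned by `model.transcribe` contains a `"segments"` list whose entries include `"start"` and `"end"` times in seconds and the segment's `"text"`. The sketch below turns those segments into the SRT subtitle format; `format_timestamp` and `segments_to_srt` are illustrative helpers, not part of Whisper or Gradio.

```python
def format_timestamp(seconds: float) -> str:
    """Format a time in seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments: list) -> str:
    """Turn Whisper-style segments (dicts with start, end, text) into SRT text."""
    blocks = []
    for index, seg in enumerate(segments, start=1):
        start = format_timestamp(seg["start"])
        end = format_timestamp(seg["end"])
        # Each SRT entry: number, time range, text, then a blank line separator.
        blocks.append(f"{index}\n{start} --> {end}\n{seg['text'].strip()}\n")
    return "\n".join(blocks)
```

For example, `segments_to_srt(model.transcribe(path)["segments"])` returns a string that could be shown in the text output or saved as an `.srt` file. Whisper's command-line tool can also write subtitles directly with `--output_format srt`; the helper above is only useful when you want the result inside your own Python code.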